人脸检测

- github topics

- face_recognition: 本项目是世界上最简洁的人脸识别库,你可以使用Python和命令行工具提取、识别、操作人脸。本项目的人脸识别是基于业内领先的C++开源库 dlib中的深度学习模型,用Labeled Faces in the Wild人脸数据集进行测试,有高达99.38%的准确率。但对小孩和亚洲人脸的识别准确率尚待提升。

- deepface: 一张图片一张脸 Deepface is a lightweight face recognition and facial attribute analysis (age, gender, emotion and race) framework for python. It is a hybrid face recognition framework wrapping state-of-the-art models: VGG-Face, Google FaceNet, OpenFace, Facebook DeepFace, DeepID, ArcFace and Dlib.

- yolov5: YOLOv5 🚀 is a family of object detection architectures and models pretrained on the COCO dataset, and represents Ultralytics open-source research into future vision AI methods, incorporating lessons learned and best practices evolved over thousands of hours of research and development.

人脸检测和属性分析By百度云

import requests

import base64

from PIL import Image

import matplotlib.pyplot as plt

image_path = './datasets/image/face_detection.jpg'

获取access_token函数

def func_face_get_baidu_access_token():

"""

获取baidu的access_token

:return access_token

"""

# encoding:utf-8

api_key = 'you_api_key'

secret_key = 'you_secret_key'

# client_id 为官网获取的AK, client_secret 为官网获取的SK

# host = 'https://aip.baidubce.com/oauth/2.0/token?grant_type=client_credentials&client_id=【官网获取的AK】&client_secret=【官网获取的SK】'

host = 'https://aip.baidubce.com/oauth/2.0/token?grant_type=client_credentials&client_id=%s&client_secret=%s' % (api_key, secret_key)

response = requests.get(host)

access_token = ''

if response:

res = response.json()

print(res)

access_token = res.get('access_token', '')

return access_token

access_token = func_face_get_baidu_access_token()

print(access_token)

{'refresh_token': '25.6fc065b464989fce6cbd41d4e6ae1b0a.315360000.1959759303.282335-25143866', 'expires_in': 2592000, 'session_key': '9mzdA5Pj7ZKeP5jo0GEKduVWm7i9fwTHWSpG06S/723YxM+caN9pBehP4cuPHZ3duhWbbqJ27F7p1oAVdqi/11rl5aG07g==', 'access_token': '24.896a37769e0b9eb3ee4548f4c8259ec7.2592000.1646991303.282335-25143866', 'scope': 'public vis-classify_dishes vis-classify_car brain_all_scope vis-classify_animal vis-classify_plant brain_object_detect brain_realtime_logo brain_dish_detect brain_car_detect brain_animal_classify brain_plant_classify brain_ingredient brain_advanced_general_classify brain_custom_dish vis-faceverify_FACE_V3 brain_poi_recognize brain_vehicle_detect brain_redwine brain_currency brain_vehicle_damage brain_multi_ object_detect wise_adapt lebo_resource_base lightservice_public hetu_basic lightcms_map_poi kaidian_kaidian ApsMisTest_Test权限 vis-classify_flower lpq_开放 cop_helloScope ApsMis_fangdi_permission smartapp_snsapi_base smartapp_mapp_dev_manage iop_autocar oauth_tp_app smartapp_smart_game_openapi oauth_sessionkey smartapp_swanid_verify smartapp_opensource_openapi smartapp_opensource_recapi fake_face_detect_开放Scope vis-ocr_虚拟人物助理 idl-video_虚拟人物助理 smartapp_component smartapp_search_plugin avatar_video_test b2b_tp_openapi b2b_tp_openapi_online', 'session_secret': '35e5528d67d5a4f50022fa47ad1c590e'}

24.896a37769e0b9eb3ee4548f4c8259ec7.2592000.1646991303.282335-25143866

获取识别结果函数

def func_face_get_face_detection_result(image_path, access_token):

"""

人脸检测

:param image_path: 图片地址

:param access_token: 鉴权

:return res: 检测结果

"""

request_url = "https://aip.baidubce.com/rest/2.0/face/v3/detect"

# 二进制方式打开图片文件

f = open(image_path, 'rb')

img = base64.b64encode(f.read())

params = {"image":img, "image_type": "BASE64", "max_face_num": 5, "face_field": "age,gender"}

# params = "{\"image\":\"027d8308a2ec665acb1bdf63e513bcb9\",\"image_type\":\"FACE_TOKEN\",\"face_field\":\"faceshape,facetype\"}"

request_url = request_url + "?access_token=" + access_token

headers = {'content-type': 'application/json'}

response = requests.post(request_url, data=params, headers=headers)

res = {}

if response:

res = response.json()

response.close()

return res

print(func_face_get_face_detection_result(image_path, access_token=access_token))

{'error_code': 0, 'error_msg': 'SUCCESS', 'log_id': 2103952089, 'timestamp': 1644399303, 'cached': 0, 'result': {'face_num': 3, 'face_list': [{'face_token': '8e645a3f8b54fbf6b139e021c55dc7f0', 'location': {'left': 561.41, 'top': 221.47, 'width': 65, 'height': 68, 'rotation': 1}, 'face_probability': 1, 'angle': {'yaw': 77, 'pitch': 15, 'roll': -11.89}, 'age': 24, 'gender': {'type': 'male', 'probability': 1}}, {'face_token': '6db2d69550c91ed03b2b408ca15dd591', 'location': {'left': 756.48, 'top': 251.15, 'width': 65, 'height': 68, 'rotation': 14}, 'face_probability': 1, 'angle': {'yaw': 76.54, 'pitch': 18.85, 'roll': -1.31}, 'age': 22, 'gender': {'type': 'female', 'probability': 0.98}}, {'face_token': 'e66641016cc327aa7e7fb8543ee7116a', 'location': {'left': 400.68, 'top': 249.63, 'width': 62, 'height': 58, 'rotation': -6}, 'face_probability': 1, 'angle': {'yaw': -33.15, 'pitch': 12.93, 'roll': -0.94}, 'age': 22, 'gender': {'type': 'female', 'probability': 1}}]}}

image = Image.open(image_path)

plt.imshow(image)

plt.show()

批量识别结果函数

# def func_face_batch_detection():

# """

# 批量识别

# """

# image_path_list = sorted(os.listdir(image_file_path), key=lambda x: int(x.split('.')[0]))

# print(image_path_list)

# print('\n')

# with open(image_face_detection_result_path, mode='a+', encoding='utf-8') as f_w:

# for image_name in image_path_list:

# image_id = image_name.split('.')[0]

# image_path = os.path.join(image_file_path, image_name)

# print('image_id: {}, image_path: {}'.format(image_id, image_path))

# try:

# image_res = func_face_get_face_detection_result(image_path, access_token)

# except Exception as e:

# print(type(e).__name__)

# image_res = {}

# print('image_res: {}'.format(image_res))

# f_w.write(image_id + '\t' + json.dumps(image_res) + '\n')

# print('\n')

# time.sleep(5)

# func_face_batch_detection()

识别结果可视化

# with open(image_face_detection_result_path, mode='r', encoding='utf-8') as f:

# for line in f:

# line = line.strip()

# image_id, image_res = line.split('\t')

# image_res = json.loads(image_res)

# image_path = os.path.join(image_file_path, image_id+'.jpg')

# print('image_path: {} \n'.format(image_path))

# # if image_id == '5':

# # break

# # # 图片可视化

# try:

# image = np.array(plt.imread(image_path))

# # print(image_id, image.shape)

# # plt.figure(figsize=(9, 9))

# # plt.imshow(image)

# result = image_res.get('result', {})

# face_list = result.get('face_list', [])

# location_list = []

# for i in face_list:

# location_list.append(i.get('location', {}))

# # print(location_list)

# # print(len(location_list))

# # 画出检测到的文本框

# plt.figure(figsize=(9, 9))

# plt.imshow(image)

# for box in location_list:

# top = box.get('top', 0) # 表示定位位置的长方形左上顶点的垂直坐标

# left = box.get('left', 0) # 表示定位位置的长方形左上顶点的水平坐标

# width = box.get('width', 0) # 表示定位位置的长方形的宽度

# height = box.get('height', 0) # 表示定位位置的长方形的高度

# rotation = box.get('rotation', 0) # 人脸框相对于竖直方向的顺时针旋转角,[-180,180]

# plt.plot([left, left+width], [top, top], 'r', linewidth=1.5)

# plt.plot([left+width, left+width], [top, top+height], 'r', linewidth=1.5)

# plt.plot([left+width, left], [top+height, top+height], 'r', linewidth=1.5)

# plt.plot([left, left], [top+height, top], 'r', linewidth=1.5)

# plt.savefig(os.path.join(image_face_detection_dir_result_path, image_id+'.jpg'))

# plt.show()

# except Exception as e:

# print(type(e).__name__)

# command = 'cp %s %s' % (os.path.join(image_file_path, image_id+'.jpg'), os.path.join(image_face_detection_dir_result_path, image_id+'.jpg'))

# if os.system(command) != 0:

# print('cp error!')

# # break

人脸检测By face_recognition

从图片中识别人脸位置

from PIL import Image

import face_recognition

%matplotlib inline

image_path = './datasets/image/biden.jpg'

image = face_recognition.load_image_file(image_path)

%%time

# face_locations = face_recognition.face_locations(image)

face_locations = face_recognition.face_locations(image, number_of_times_to_upsample=0, model='cnn')

print('found {} face(s) in this photograph.'.format(len(face_locations)))

found 1 face(s) in this photograph.

CPU times: user 1.06 s, sys: 786 ms, total: 1.85 s

Wall time: 8.09 s

for face_location in face_locations:

top, right, bottom, left = face_location

print('a face is located at pixel location Top:{}, Left:{}, Bottom:{}, Right:{}'.format(top, left, bottom, right))

face_image = image[top:bottom, left:right]

pil_image = Image.fromarray(face_image)

pil_image.show()

a face is located at pixel location Top:235, Left:428, Bottom:518, Right:712

从视频中识别出人脸位置和其名字

import cv2

import face_recognition

# 打开视频

# input_video_path = './datasets/video/hamilton_clip.mp4'

# input_video_path = './datasets/video/song.mp4'

input_video_path = './datasets/video/laowang.mp4'

output_video_path = './datasets/video/%s_output.avi' % input_video_path.rsplit('/', 1)[1].split('.')[0]

output_video_path

'./datasets/video/laowang_output.avi'

input_video = cv2.VideoCapture(input_video_path)

frame_count = int(input_video.get(cv2.CAP_PROP_FRAME_COUNT)) # 视频帧数

frame_count

1456

frame_height = int(input_video.get(cv2.CAP_PROP_FRAME_HEIGHT)) # 视频高度

frame_width = int(input_video.get(cv2.CAP_PROP_FRAME_WIDTH)) # 视频宽度

frame_rate = input_video.get(cv2.CAP_PROP_FPS) # 帧速率

frame_height, frame_width, frame_rate

(1280, 720, 25.0)

# 创建输出电影文件(确保分辨率/帧速率匹配输入视频!)

# VideoWriter_fourcc为视频编解码器

# fourcc = cv2.VideoWriter_fourcc(*'XVID')

fourcc = cv2.VideoWriter_fourcc('X', 'V', 'I', 'D') # ,该参数是MPEG-4编码类型,文件名后缀为.avi

# 29.97为帧播放速率,(640,360)为视频帧大小

# output_video = cv2.VideoWriter(output_video_path, fourcc, 29.97, (640, 360))

output_video = cv2.VideoWriter(output_video_path, fourcc, frame_rate, (frame_width, frame_height))

# 加载一些示例图片并学习如何识别它们

lmm_image = face_recognition.load_image_file('./datasets/image/lin-manuel-miranda.png')

lmm_face_encodings = face_recognition.face_encodings(lmm_image)[0]

al_image = face_recognition.load_image_file('./datasets/image/alex-lacamoire.png')

al_face_encodings = face_recognition.face_encodings(al_image)[0]

known_faces = [lmm_face_encodings, al_face_encodings]

known_names = ['Lin-Manuel Miranda', 'Alex Lacamoire']

# 初始化一些变量

face_locations = []

face_encodings = []

face_names = []

frame_number = 0

%%time

while True:

# Grab a single frame of video

ret, frame = input_video.read()

frame_number += 1

# quit when the input video file ends

if not ret:

break

# 将图像从BGR颜色(OpenCV使用的)转换为RGB颜色(人脸识别使用的)

rgb_frame = frame[:, :, ::-1]

# 找出当前视频帧中所有的人脸和人脸编码

face_locations = face_recognition.face_locations(rgb_frame)

face_encodings = face_recognition.face_encodings(rgb_frame, face_locations)

face_names = []

for face_encoding in face_encodings:

# 看看这张脸和已知的脸是否匹配

match = face_recognition.compare_faces(known_faces, face_encoding, tolerance=0.50)

name = 'unknown'

if match[0]:

name = known_names[0]

elif match[1]:

name = known_names[1]

face_names.append(name)

# label the results

for (top, right, bottom, left), name in zip(face_locations, face_names):

# draw a box around the face

# (0,0,255)对应颜色(BGR),2对应线粗细

cv2.rectangle(frame, (left, top), (right, bottom), (0, 0, 255), 2)

font = cv2.FONT_HERSHEY_DUPLEX # 正常大小无衬线字体

cv2.putText(frame, name, (left + 6, bottom - 6), font, 0.5, (255, 255, 255), 1)

if frame_number % 100 == 0:

print('writing frame {} / {}'.format(frame_number, frame_count))

output_video.write(frame)

writing frame 100 / 1456

writing frame 200 / 1456

writing frame 300 / 1456

writing frame 400 / 1456

writing frame 500 / 1456

writing frame 600 / 1456

writing frame 700 / 1456

writing frame 800 / 1456

writing frame 900 / 1456

writing frame 1000 / 1456

writing frame 1100 / 1456

writing frame 1200 / 1456

writing frame 1300 / 1456

writing frame 1400 / 1456

CPU times: user 13min 21s, sys: 2.47 s, total: 13min 23s

Wall time: 13min 13s

# all done

input_video.release() # 释放视频流

cv2.destroyAllWindows() # 关闭所有窗口

从批量frame中识别人脸位置

# import cv2

# import face_recognition

# input_video_path = './datasets/video/hamilton_clip.mp4'

# input_video = cv2.VideoCapture(input_video_path)

# frames = []

# frame_number = 0

# batch_size = 64

# while input_video.isOpened():

# ret, frame = input_video.read()

# if not ret:

# break

# frame = frame[:, :, ::-1]

# frame_number += 1

# frames.append(frame)

# if len(frames) == batch_size:

# batch_of_face_locations = face_recognition.batch_face_locations(frames, number_of_times_to_upsample=1, batch_size=batch_size)

# for frame_number_in_batch, face_locations in enumerate(batch_of_face_locations):

# number_of_faces_in_frame = len(face_locations)

# if number_of_faces_in_frame > 0:

# frame_idx = frame_number - batch_size + frame_number_in_batch

# print('found {} face(s) in frame #{}.'.format(number_of_faces_in_frame, frame_idx))

# for face_location in face_locations:

# top, right, bottom, left = face_location

# print('- a face is located at pixel location top:{}, left:{}, bottom:{}, right:{}'.format(top, left, bottom, right))

# frames = []

从图片中识别人脸位置并画出框

import face_recognition

from PIL import Image, ImageDraw

import numpy as np

obama_image = face_recognition.load_image_file('./datasets/image/obama.jpg')

obama_face_encoding = face_recognition.face_encodings(obama_image)[0]

# 这里有可能会出现报错,可能是你tensorflow的进程占用了GPU,导致这边调用不了,

# 将所有kernel重置再运行该代码就好了

biden_image = face_recognition.load_image_file('./datasets/image/biden.jpg')

biden_face_encoding = face_recognition.face_encodings(biden_image)[0]

known_face_encodings = [

obama_face_encoding,

biden_face_encoding

]

known_face_names = [

"Barack Obama",

"Joe Biden"

]

unknown_image = face_recognition.load_image_file('./datasets/image/two_people.jpg')

face_locations = face_recognition.face_locations(unknown_image, number_of_times_to_upsample=1, model='cnn')

face_encodings = face_recognition.face_encodings(unknown_image, known_face_locations=face_locations, num_jitters=1, model='small')

pil_image = Image.fromarray(unknown_image)

draw = ImageDraw.Draw(pil_image)

for (top, right, bottom, left), face_encoding in zip(face_locations, face_encodings):

matches = face_recognition.compare_faces(known_face_encodings=known_face_encodings, face_encoding_to_check=face_encoding, tolerance=0.6)

name = 'unknown'

face_distances = face_recognition.face_distance(face_encodings=known_face_encodings, face_to_compare=face_encoding)

best_match_index = np.argmin(face_distances)

if matches[best_match_index]:

name = known_face_names[best_match_index]

draw.rectangle(((left, top), (right, bottom)), outline=(0, 0, 255))

text_width, text_height = draw.textsize(name)

draw.rectangle(((left, bottom - text_height - 10), (right, bottom)), fill=(0, 0, 255), outline=(0, 0, 255))

draw.text((left + 6, bottom - text_height - 5), name, fill=(255, 255, 255, 255))

del draw

pil_image.show()

# pil_image.save(output_image_path)

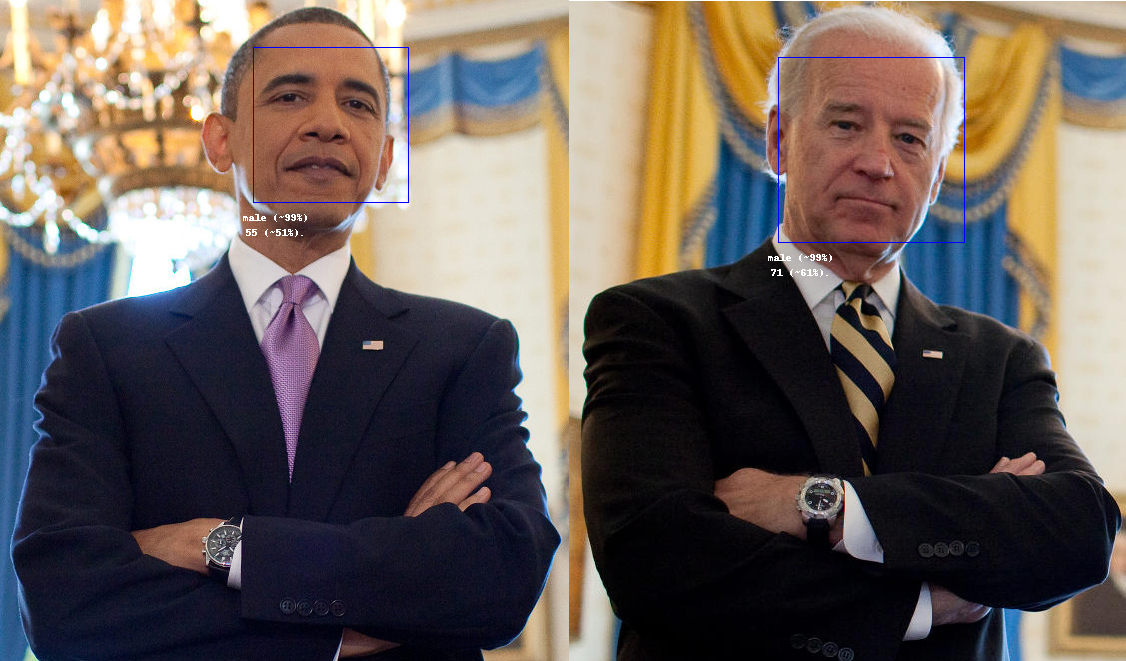

人脸性别年龄识别

- age-gender-estimation

- age-and-gender

- Age and Gender Prediction From Face Images Using Attentional Convolutional Network

识别示例 By age-and-gender

from age_and_gender import *

from PIL import Image, ImageDraw, ImageFont

landmarks_model_path = './datasets/model/age_and_gender/shape_predictor_5_face_landmarks.dat'

gender_model_path = './datasets/model/age_and_gender/dnn_gender_classifier_v1.dat'

age_model_path = './datasets/model/age_and_gender/dnn_age_predictor_v1.dat'

input_image_path = './datasets/image/face_detection.jpg'

input_image_path = './datasets/image/two_people.jpg'

age_gender_model = AgeAndGender()

age_gender_model.load_shape_predictor(landmarks_model_path)

age_gender_model.load_dnn_age_predictor(age_model_path)

age_gender_model.load_dnn_gender_classifier(gender_model_path)

image = Image.open(input_image_path).convert('RGB')

rec_result = age_gender_model.predict(image)

rec_result

[{'gender': {'value': 'male', 'confidence': 99},

'age': {'value': 55, 'confidence': 60},

'face': [244, 62, 394, 211]},

{'gender': {'value': 'male', 'confidence': 99},

'age': {'value': 70, 'confidence': 72},

'face': [792, 95, 941, 244]}]

draw = ImageDraw.Draw(image)

for info in rec_result:

gender = info.get('gender', {}).get('value', 'unknown')

gender_confidence = info.get('gender', {}).get('confidence', 100)

age = info.get('age', {}).get('value', 999)

age_confidence = info.get('age', {}).get('confidence', 100)

face_location = info.get('face', [])

left, top, right, bottom = face_location[0], face_location[1], face_location[2], face_location[3]

draw.rectangle(((left, top), (right, bottom)), outline=(0, 0, 255))

draw.text((left - 10, bottom + 10), f"{gender} (~{gender_confidence}%)\n{age} (~{age_confidence}%).", fill=(255, 255, 255, 255), align='center')

del draw

image.show()

识别示例 By face_recognition 和 age-and-gender

图片

from age_and_gender import *

from PIL import Image, ImageDraw, ImageFont

import numpy as np

import face_recognition

landmarks_model_path = './datasets/model/age_and_gender/shape_predictor_5_face_landmarks.dat'

gender_model_path = './datasets/model/age_and_gender/dnn_gender_classifier_v1.dat'

age_model_path = './datasets/model/age_and_gender/dnn_age_predictor_v1.dat'

input_image_path = './datasets/image/face_detection.jpg'

input_image_path = './datasets/image/two_people.jpg'

age_gender_model = AgeAndGender()

age_gender_model.load_shape_predictor(landmarks_model_path)

age_gender_model.load_dnn_age_predictor(age_model_path)

age_gender_model.load_dnn_gender_classifier(gender_model_path)

image = Image.open(input_image_path).convert('RGB')

face_locations = face_recognition.face_locations(np.asarray(image), model='hog')

rec_result = age_gender_model.predict(image, face_locations)

rec_result

[{'gender': {'value': 'male', 'confidence': 99},

'age': {'value': 71, 'confidence': 61},

'face': [778, 57, 964, 242]},

{'gender': {'value': 'male', 'confidence': 99},

'age': {'value': 55, 'confidence': 51},

'face': [253, 47, 408, 202]}]

draw = ImageDraw.Draw(image)

for info in rec_result:

gender = info.get('gender', {}).get('value', 'unknown')

gender_confidence = info.get('gender', {}).get('confidence', 100)

age = info.get('age', {}).get('value', 999)

age_confidence = info.get('age', {}).get('confidence', 100)

face_location = info.get('face', [])

left, top, right, bottom = face_location[0], face_location[1], face_location[2], face_location[3]

draw.rectangle(((left, top), (right, bottom)), outline=(0, 0, 255))

draw.text((left - 10, bottom + 10), f"{gender} (~{gender_confidence}%)\n{age} (~{age_confidence}%).", fill=(255, 255, 255, 255), align='center')

del draw

image.show()

视频

import cv2

from age_and_gender import *

from PIL import Image, ImageDraw, ImageFont

import numpy as np

import face_recognition

landmarks_model_path = './datasets/model/age_and_gender/shape_predictor_5_face_landmarks.dat'

gender_model_path = './datasets/model/age_and_gender/dnn_gender_classifier_v1.dat'

age_model_path = './datasets/model/age_and_gender/dnn_age_predictor_v1.dat'

# input_video_path = './datasets/video/hamilton_clip.mp4'

# input_video_path = './datasets/video/song.mp4'

input_video_path = './datasets/video/laowang.mp4'

output_video_path = './datasets/video/%s_output.avi' % input_video_path.rsplit('/', 1)[1].split('.')[0]

output_video_path

'./datasets/video/laowang_output.avi'

# 打开性别年龄模型

age_gender_model = AgeAndGender()

age_gender_model.load_shape_predictor(landmarks_model_path)

age_gender_model.load_dnn_age_predictor(age_model_path)

age_gender_model.load_dnn_gender_classifier(gender_model_path)

# 打开视频

input_video = cv2.VideoCapture(input_video_path)

frame_count = int(input_video.get(cv2.CAP_PROP_FRAME_COUNT)) # 视频帧数

frame_count

1456

frame_height = int(input_video.get(cv2.CAP_PROP_FRAME_HEIGHT)) # 视频高度

frame_width = int(input_video.get(cv2.CAP_PROP_FRAME_WIDTH)) # 视频宽度

frame_rate = input_video.get(cv2.CAP_PROP_FPS) # 帧速率

frame_height, frame_width, frame_rate

(1280, 720, 25.0)

# 创建输出电影文件(确保分辨率/帧速率匹配输入视频!)

# VideoWriter_fourcc为视频编解码器

# fourcc = cv2.VideoWriter_fourcc(*'XVID')

fourcc = cv2.VideoWriter_fourcc('X', 'V', 'I', 'D') # ,该参数是MPEG-4编码类型,文件名后缀为.avi

# 29.97为帧播放速率,(640,360)为视频帧大小

# output_video = cv2.VideoWriter(output_video_path, fourcc, 29.97, (640, 360))

output_video = cv2.VideoWriter(output_video_path, fourcc, frame_rate, (frame_width, frame_height))

# 初始化一些变量

frame_number = 0

%%time

while True:

# Grab a single frame of video

ret, frame = input_video.read()

frame_number += 1

# quit when the input video file ends

if not ret:

break

# 将图像从BGR颜色(OpenCV使用的)转换为RGB颜色(人脸识别使用的)

rgb_frame = frame[:, :, ::-1]

# 找出当前视频帧中所有的人脸和人脸编码

face_locations = face_recognition.face_locations(rgb_frame, model='cnn')

rec_result = age_gender_model.predict(rgb_frame, face_locations)

for info in rec_result:

gender = info.get('gender', {}).get('value', 'unknown')

gender_confidence = info.get('gender', {}).get('confidence', 100)

age = info.get('age', {}).get('value', 999)

age_confidence = info.get('age', {}).get('confidence', 100)

face_location = info.get('face', [])

left, top, right, bottom = face_location[0], face_location[1], face_location[2], face_location[3]

# draw a box around the face

# (0,0,255)对应颜色(BGR),2对应线粗细

cv2.rectangle(frame, (left, top), (right, bottom), (0, 0, 255), 2)

font = cv2.FONT_HERSHEY_DUPLEX # 正常大小无衬线字体

name = f"{gender} (~{gender_confidence}%)\n {age} (~{age_confidence}%)."

cv2.putText(frame, name, (left - 6, bottom + 10), font, 0.5, (255, 255, 255), 1)

if frame_number % 100 == 0:

print('writing frame {} / {}'.format(frame_number, frame_count))

output_video.write(frame)

writing frame 100 / 1456

writing frame 200 / 1456

writing frame 300 / 1456

writing frame 400 / 1456

writing frame 500 / 1456

writing frame 600 / 1456

writing frame 700 / 1456

writing frame 800 / 1456

writing frame 900 / 1456

writing frame 1000 / 1456

writing frame 1100 / 1456

writing frame 1200 / 1456

writing frame 1300 / 1456

writing frame 1400 / 1456

CPU times: user 7min 54s, sys: 25.1 s, total: 8min 19s

Wall time: 8min 8s

# all done

input_video.release() # 释放视频流

cv2.destroyAllWindows() # 关闭所有窗口

识别示例 By deepface 推荐

视频

- 先face_recoginition

- 再deepface

import cv2

from age_and_gender import *

from PIL import Image, ImageDraw, ImageFont

import numpy as np

import face_recognition

from deepface import DeepFace

# input_video_path = './datasets/video/hamilton_clip.mp4'

# input_video_path = './datasets/video/song.mp4'

input_video_path = './datasets/video/laowang.mp4'

output_video_path = './datasets/video/%s_output.avi' % input_video_path.rsplit('/', 1)[1].split('.')[0]

output_video_path

'./datasets/video/laowang_output.avi'

# 打开视频

input_video = cv2.VideoCapture(input_video_path)

frame_count = int(input_video.get(cv2.CAP_PROP_FRAME_COUNT)) # 视频帧数

frame_count

1456

frame_height = int(input_video.get(cv2.CAP_PROP_FRAME_HEIGHT)) # 视频高度

frame_width = int(input_video.get(cv2.CAP_PROP_FRAME_WIDTH)) # 视频宽度

frame_rate = input_video.get(cv2.CAP_PROP_FPS) # 帧速率

frame_height, frame_width, frame_rate

(1280, 720, 25.0)

# 创建输出电影文件(确保分辨率/帧速率匹配输入视频!)

# VideoWriter_fourcc为视频编解码器

# fourcc = cv2.VideoWriter_fourcc(*'XVID')

fourcc = cv2.VideoWriter_fourcc('X', 'V', 'I', 'D') # ,该参数是MPEG-4编码类型,文件名后缀为.avi

# 29.97为帧播放速率,(640,360)为视频帧大小

# output_video = cv2.VideoWriter(output_video_path, fourcc, 29.97, (640, 360))

output_video = cv2.VideoWriter(output_video_path, fourcc, frame_rate, (frame_width, frame_height))

# 初始化一些变量

frame_number = -1

%%time

while True:

# Grab a single frame of video

ret, frame = input_video.read()

frame_number += 1

# quit when the input video file ends

if not ret:

break

# 将图像从BGR颜色(OpenCV使用的)转换为RGB颜色(人脸识别使用的)

rgb_frame = frame[:, :, ::-1]

# 找出当前视频帧中所有的人脸和人脸编码

face_locations = face_recognition.face_locations(rgb_frame, model='cnn')

for top, right, bottom, left in face_locations:

image = rgb_frame[left:right, top:bottom]

try:

obj = DeepFace.analyze(img_path = image,

actions = ['age', 'gender'],#, 'race', 'emotion'],

enforce_detection=False,

detector_backend='dlib',

prog_bar=False)

except:

obj = {}

age = obj.get('age', 'unknown')

gender = obj.get('gender', 'unknown')

# draw a box around the face

# (0,0,255)对应颜色(BGR),2对应线粗细

cv2.rectangle(frame, (left, top), (right, bottom), (0, 0, 255), 2)

font = cv2.FONT_HERSHEY_DUPLEX # 正常大小无衬线字体

name = f"gender: {gender}, age: {age}."

cv2.putText(frame, name, (left - 6, bottom + 10), font, 0.5, (255, 255, 255), 1)

if frame_number % 100 == 0:

print('writing frame {} / {}'.format(frame_number, frame_count))

output_video.write(frame)

writing frame 0 / 1456

writing frame 100 / 1456

writing frame 200 / 1456

writing frame 300 / 1456

writing frame 400 / 1456

writing frame 500 / 1456

writing frame 600 / 1456

writing frame 700 / 1456

writing frame 800 / 1456

writing frame 900 / 1456

writing frame 1000 / 1456

writing frame 1100 / 1456

writing frame 1200 / 1456

writing frame 1300 / 1456

writing frame 1400 / 1456

CPU times: user 10min 32s, sys: 2min 19s, total: 12min 51s

Wall time: 11min 21s

视频

- 不用先face_recoginition

- 直接检测+age_gender一起上

# import cv2

# from age_and_gender import *

# from PIL import Image, ImageDraw, ImageFont

# import numpy as np

# import face_recognition

# from deepface import DeepFace

# # input_video_path = './datasets/video/hamilton_clip.mp4'

# # input_video_path = './datasets/video/song.mp4'

# input_video_path = './datasets/video/laowang.mp4'

# output_video_path = './datasets/video/%s_output.avi' % input_video_path.rsplit('/', 1)[1].split('.')[0]

# output_video_path

# # 打开视频

# input_video = cv2.VideoCapture(input_video_path)

# frame_count = int(input_video.get(cv2.CAP_PROP_FRAME_COUNT)) # 视频帧数

# frame_count

# frame_height = int(input_video.get(cv2.CAP_PROP_FRAME_HEIGHT)) # 视频高度

# frame_width = int(input_video.get(cv2.CAP_PROP_FRAME_WIDTH)) # 视频宽度

# frame_rate = input_video.get(cv2.CAP_PROP_FPS) # 帧速率

# frame_height, frame_width, frame_rate

# # 创建输出电影文件(确保分辨率/帧速率匹配输入视频!)

# # VideoWriter_fourcc为视频编解码器

# # fourcc = cv2.VideoWriter_fourcc(*'XVID')

# fourcc = cv2.VideoWriter_fourcc('X', 'V', 'I', 'D') # ,该参数是MPEG-4编码类型,文件名后缀为.avi

# # 29.97为帧播放速率,(640,360)为视频帧大小

# # output_video = cv2.VideoWriter(output_video_path, fourcc, 29.97, (640, 360))

# output_video = cv2.VideoWriter(output_video_path, fourcc, frame_rate, (frame_width, frame_height))

# # 初始化一些变量

# frame_number = -1

# %%time

# while True:

# # Grab a single frame of video

# ret, frame = input_video.read()

# frame_number += 1

# # quit when the input video file ends

# if not ret:

# break

# # 将图像从BGR颜色(OpenCV使用的)转换为RGB颜色(人脸识别使用的)

# rgb_frame = frame[:, :, ::-1]

# # 找出当前视频帧中所有的人脸和人脸编码

# obj = DeepFace.analyze(img_path = rgb_frame, actions = ['age', 'gender'], enforce_detection=False, detector_backend='dlib', prog_bar=False)

# age = obj.get('age', 'unknown')

# gender = obj.get('gender', 'unknown')

# region = obj.get('region', {})

# if region:

# x = region.get('x', 0)

# y = region.get('y', 0)

# w = region.get('w', 0)

# h = region.get('h', 0)

# # draw a box around the face

# # (0,0,255)对应颜色(BGR),2对应线粗细

# cv2.rectangle(frame, (x, y), (x+w, y+h), (0, 0, 255), 2)

# font = cv2.FONT_HERSHEY_DUPLEX # 正常大小无衬线字体

# name = f"gender: {gender}, age: {age}."

# cv2.putText(frame, name, (x - 6, y + h + 10), font, 0.5, (255, 255, 255), 1)

# if frame_number % 100 == 0:

# print('writing frame {} / {}'.format(frame_number, frame_count))

# output_video.write(frame)

人数识别

import cv2

import face_recognition

# 打开视频

input_video_path = './datasets/video/hamilton_clip.mp4'

# input_video_path = './datasets/video/song.mp4'

# input_video_path = './datasets/video/laowang.mp4'

input_video = cv2.VideoCapture(input_video_path)

frame_count = int(input_video.get(cv2.CAP_PROP_FRAME_COUNT)) # 视频帧数

frame_count

2356

frame_height = int(input_video.get(cv2.CAP_PROP_FRAME_HEIGHT)) # 视频高度

frame_width = int(input_video.get(cv2.CAP_PROP_FRAME_WIDTH)) # 视频宽度

frame_rate = input_video.get(cv2.CAP_PROP_FPS) # 帧速率

frame_height, frame_width, frame_rate

(360, 640, 29.97)

known_faces = []

# 初始化一些变量

face_locations = []

face_encodings = []

frame_number = 0

face_count = 0

%%time

while True:

# Grab a single frame of video

ret, frame = input_video.read()

frame_number += 1

# quit when the input video file ends

if not ret:

break

# 将图像从BGR颜色(OpenCV使用的)转换为RGB颜色(人脸识别使用的)

rgb_frame = frame[:, :, ::-1]

# 找出当前视频帧中所有的人脸和人脸编码

face_locations = face_recognition.face_locations(rgb_frame, model='cnn')

face_encodings = face_recognition.face_encodings(rgb_frame, face_locations)

for face_encoding in face_encodings:

# 看看这张脸和已知的脸是否匹配

match = face_recognition.compare_faces(known_faces, face_encoding, tolerance=0.6)

if any(match) :

pass

else:

face_count += 1

known_faces.append(face_encoding)

if frame_number % 100 == 0:

# print(match)

print('processing frame {} / {}'.format(frame_number, frame_count))

processing frame 100 / 2356

processing frame 200 / 2356

processing frame 300 / 2356

processing frame 400 / 2356

processing frame 500 / 2356

processing frame 600 / 2356

processing frame 700 / 2356

processing frame 800 / 2356

processing frame 900 / 2356

processing frame 1000 / 2356

processing frame 1100 / 2356

processing frame 1200 / 2356

processing frame 1300 / 2356

processing frame 1400 / 2356

processing frame 1500 / 2356

processing frame 1600 / 2356

processing frame 1700 / 2356

processing frame 1800 / 2356

processing frame 1900 / 2356

processing frame 2000 / 2356

processing frame 2100 / 2356

processing frame 2200 / 2356

processing frame 2300 / 2356

CPU times: user 1min 57s, sys: 17 s, total: 2min 14s

Wall time: 2min 11s

face_count

2

from IPython.display import Video

Video(input_video_path)

整合:人数+性别+年龄识别

import cv2

from age_and_gender import *

from PIL import Image, ImageDraw, ImageFont

import numpy as np

import face_recognition

landmarks_model_path = './datasets/model/age_and_gender/shape_predictor_5_face_landmarks.dat'

gender_model_path = './datasets/model/age_and_gender/dnn_gender_classifier_v1.dat'

age_model_path = './datasets/model/age_and_gender/dnn_age_predictor_v1.dat'

input_video_path = './datasets/video/hamilton_clip.mp4'

# input_video_path = './datasets/video/song.mp4'

# input_video_path = './datasets/video/laowang.mp4'

output_video_path = './datasets/video/%s_output.avi' % input_video_path.rsplit('/', 1)[1].split('.')[0]

output_video_path

'./datasets/video/hamilton_clip_output.avi'

# 打开性别年龄模型

age_gender_model = AgeAndGender()

age_gender_model.load_shape_predictor(landmarks_model_path)

age_gender_model.load_dnn_age_predictor(age_model_path)

age_gender_model.load_dnn_gender_classifier(gender_model_path)

# 打开视频

input_video = cv2.VideoCapture(input_video_path)

frame_count = int(input_video.get(cv2.CAP_PROP_FRAME_COUNT)) # 视频帧数

frame_count

2356

frame_height = int(input_video.get(cv2.CAP_PROP_FRAME_HEIGHT)) # 视频高度

frame_width = int(input_video.get(cv2.CAP_PROP_FRAME_WIDTH)) # 视频宽度

frame_rate = input_video.get(cv2.CAP_PROP_FPS) # 帧速率

frame_height, frame_width, frame_rate

(360, 640, 29.97)

# 创建输出电影文件(确保分辨率/帧速率匹配输入视频!)

# VideoWriter_fourcc为视频编解码器

# fourcc = cv2.VideoWriter_fourcc(*'XVID')

fourcc = cv2.VideoWriter_fourcc('X', 'V', 'I', 'D') # ,该参数是MPEG-4编码类型,文件名后缀为.avi

# 29.97为帧播放速率,(640,360)为视频帧大小

# output_video = cv2.VideoWriter(output_video_path, fourcc, 29.97, (640, 360))

output_video = cv2.VideoWriter(output_video_path, fourcc, frame_rate, (frame_width, frame_height))

# 初始化一些变量

frame_number = 0

known_faces = []

face_count = 0

%%time

while True:

# Grab a single frame of video

ret, frame = input_video.read()

frame_number += 1

# quit when the input video file ends

if not ret:

break

# 将图像从BGR颜色(OpenCV使用的)转换为RGB颜色(人脸识别使用的)

rgb_frame = frame[:, :, ::-1]

# 找出当前视频帧中所有的人脸和人脸编码

face_locations = face_recognition.face_locations(rgb_frame, model='cnn')

face_encodings = face_recognition.face_encodings(rgb_frame, face_locations)

# 人数识别

for face_encoding in face_encodings:

# 看看这张脸和已知的脸是否匹配

match = face_recognition.compare_faces(known_faces, face_encoding, tolerance=0.6)

if any(match) :

pass

else:

face_count += 1

known_faces.append(face_encoding)

# 年龄性别识别

rec_result = age_gender_model.predict(rgb_frame, face_locations)

# 画出框及属性

for info in rec_result:

gender = info.get('gender', {}).get('value', 'unknown')

gender_confidence = info.get('gender', {}).get('confidence', 100)

age = info.get('age', {}).get('value', 999)

age_confidence = info.get('age', {}).get('confidence', 100)

face_location = info.get('face', [])

left, top, right, bottom = face_location[0], face_location[1], face_location[2], face_location[3]

# draw a box around the face

# (0,0,255)对应颜色(BGR),2对应线粗细

cv2.rectangle(frame, (left, top), (right, bottom), (0, 0, 255), 2)

font = cv2.FONT_HERSHEY_DUPLEX # 正常大小无衬线字体

name = f"gender: {gender} (~{gender_confidence}%)."

cv2.putText(frame, name, (left - 6, bottom + 50), font, 0.5, (255, 255, 255), 1)

name = f"age: {age} (~{age_confidence}%)."

cv2.putText(frame, name, (left - 6, bottom + 30), font, 0.5, (255, 255, 255), 1)

name = f"face_count: {face_count}."

cv2.putText(frame, name, (left - 6, bottom + 10), font, 0.5, (255, 255, 255), 1)

if frame_number % 100 == 0:

print('writing frame {} / {}'.format(frame_number, frame_count))

output_video.write(frame)

writing frame 100 / 2356

writing frame 200 / 2356

writing frame 300 / 2356

writing frame 400 / 2356

writing frame 500 / 2356

writing frame 600 / 2356

writing frame 700 / 2356

writing frame 800 / 2356

writing frame 900 / 2356

writing frame 1000 / 2356

writing frame 1100 / 2356

writing frame 1200 / 2356

writing frame 1300 / 2356

writing frame 1400 / 2356

writing frame 1500 / 2356

writing frame 1600 / 2356

writing frame 1700 / 2356

writing frame 1800 / 2356

writing frame 1900 / 2356

writing frame 2000 / 2356

writing frame 2100 / 2356

writing frame 2200 / 2356

writing frame 2300 / 2356

CPU times: user 7min 34s, sys: 19.3 s, total: 7min 53s

Wall time: 7min 49s

# all done

input_video.release() # 释放视频流

cv2.destroyAllWindows() # 关闭所有窗口